Artificial General Intelligence.

When we hear the term Artificial Intelligence (AI), we tend to picture people trying to simulate the workings of the human brain with code and machines. The field of AI is still immature, and a great deal of work remains before machines can think like humans, but it has emerged as a major player in recent years. As big companies such as Google, Amazon and Huawei focus on making AI more accessible to ordinary people, AI has become a crucial part of our lives.

We have robots that can show emotions, robots that can understand what the person in front of them is saying and respond accordingly, and even robots that can ask a person questions based on his or her mood at that moment. Google, with the help of Boston Dynamics, has reached notable milestones in programming robots.

The most common use of AI that we see is in games; it is a very basic form of AI, but it has been present there for quite some time. We also use smart assistants (such as Microsoft's Cortana, Apple's Siri, Google's Google Assistant and Amazon's Alexa) on a daily basis, and they too work on the principles of Artificial Intelligence.

So, if AI has been all around us in recent years, what is stopping us from achieving a full simulation of the human brain? I would like to talk about those factors here, and also about why the direction in which we are heading is not entirely correct.

The views that I am going to present here are not solely mine. I am writing down what I have read and understood, and what I believe to be correct. Everyone is free to reach their own conclusions. With that said, let's get started with the topic itself.

What is Artificial Intelligence?

Artificial intelligence (AI), sometimes called machine intelligence, is intelligence demonstrated by machines, in contrast to the natural intelligence displayed by humans and other animals. In computer science, AI research is defined as the study of "intelligent agents": any device that perceives its environment and takes actions that maximize its chance of successfully achieving its goals. Colloquially, the term "artificial intelligence" is applied when a machine mimics "cognitive" functions that humans associate with other human minds, such as "learning" and "problem solving".

Basically, with artificial intelligence we try to create "intelligent" agents that can understand the inputs given to them, make decisions based on those inputs and on the environment they can see, and then intelligently produce an output.

The inputs may be given in either a trained or an untrained environment. The agent then tries to match the given condition to the conditions present in its knowledge base and, after some refinement through neural networks, gives an output that, according to the agent, is meaningful for the given input.

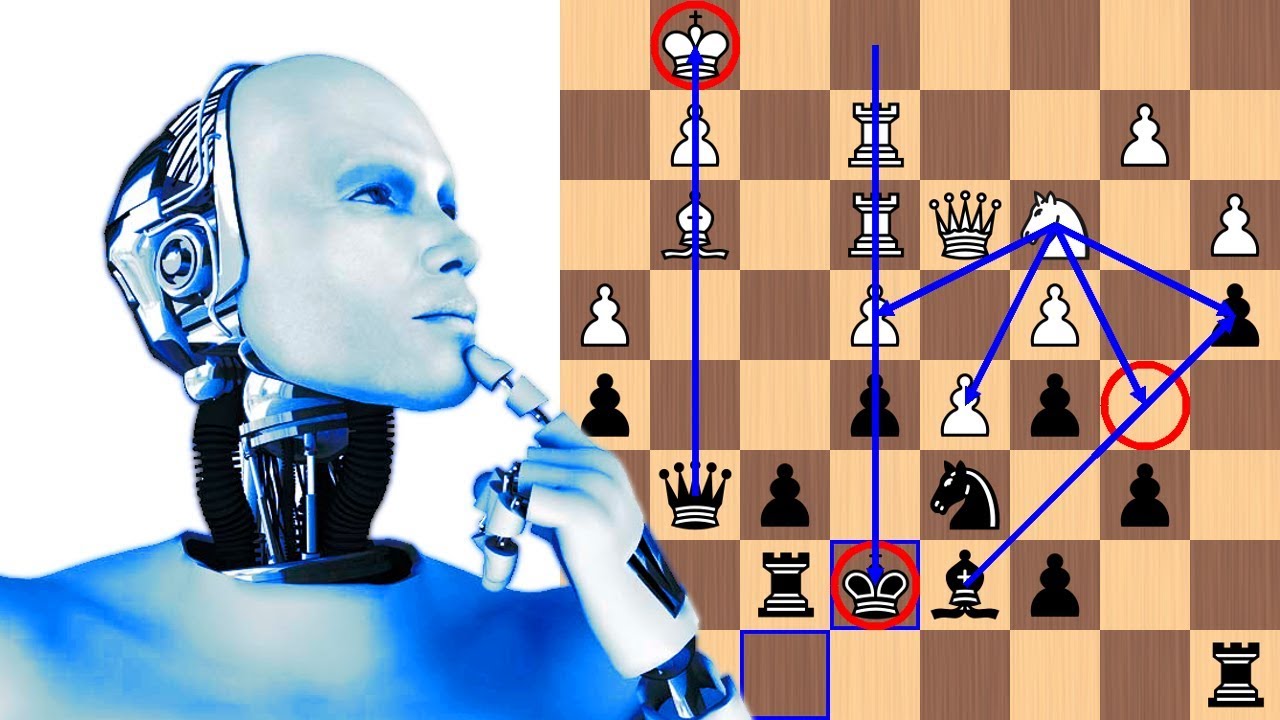

This form of machine intelligence is very limited in functionality, as it depends heavily on the input and on the machine's knowledge base. Without the correct input, the machine cannot reach any conclusion. As an example, consider a game of chess. An agent can show its intelligence within the constrained environment of the chessboard, with inputs given in accordance with the rules of chess. Once you throw in a random input that is outside the scope of that environment (here, chess), the agent fails to recognise it and either freezes or does nothing (it becomes dumb). This is not how humans react to unexpected inputs in a familiar environment. And the main aim of AI is to simulate the human brain.
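The perceive, match and act behaviour described above, and the way it breaks down on out-of-scope input, can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions: the `ModelBasedAgent` class, its method names and the contents of `KNOWLEDGE_BASE` are all hypothetical, and a real chess engine or neural network is far more elaborate.

```python
from typing import Optional

# Hypothetical knowledge base: maps a perceived condition to an action.
# The entries are illustrative only, not real chess theory.
KNOWLEDGE_BASE = {
    "opponent_attacks_queen": "move_queen_to_safety",
    "king_in_check": "block_or_move_king",
    "free_pawn_capture": "capture_pawn",
}


class ModelBasedAgent:
    """A toy agent that can only act on conditions its model already contains."""

    def __init__(self, knowledge_base: dict[str, str]) -> None:
        self.knowledge_base = knowledge_base

    def perceive(self, raw_input: str) -> str:
        # Normalise the input into the form the knowledge base expects.
        return raw_input.strip().lower().replace(" ", "_")

    def decide(self, condition: str) -> Optional[str]:
        # Look the condition up; None means "outside the model".
        return self.knowledge_base.get(condition)

    def act(self, raw_input: str) -> str:
        action = self.decide(self.perceive(raw_input))
        # An unknown condition leaves the agent with nothing to do.
        return action if action is not None else "no_action"


agent = ModelBasedAgent(KNOWLEDGE_BASE)
print(agent.act("King in check"))              # condition inside the model
print(agent.act("What is the weather today"))  # condition outside the model
```

The second call falls through to `"no_action"` because nothing in the supplied model covers it, which is the limitation the chess example points at.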

Then how are we achieving the main aim of AI? Are we really working towards a simulation of the human brain, or are we just making machines respond intelligently to our inputs? This is the question that makes AI a difficult domain. Even though we can achieve some limited intelligence in machines, we are still far from achieving full human brain simulation. The AI that we know at present is based on 'statistical learning', which is good at finding what is common but poor at finding what matters. To find what matters, we need model-free, or holistic, intelligence.
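One way to picture the claim about finding "what is common" rather than "what matters" is a deliberately crude frequency-based learner. The sketch below is an assumption-laden illustration, not a description of how modern statistical learning systems are built: the `observations` data and the `fit_majority` helper are made up for the example.

```python
from collections import Counter

# Hypothetical labelled observations: the rare events are the ones that matter.
observations = ["normal"] * 98 + ["fire"] * 2


def fit_majority(labels):
    """Return the most frequent label (a crude frequency-based learner)."""
    return Counter(labels).most_common(1)[0][0]


prediction = fit_majority(observations)

# Fraction of observations for which the single learned answer is right.
accuracy = sum(label == prediction for label in observations) / len(observations)

# Number of the rare, important events the learner actually identifies.
fires_caught = sum(
    1 for label in observations if label == "fire" and prediction == "fire"
)

print(prediction, accuracy, fires_caught)
```

The learner scores well on the common case while catching none of the rare events, which is the gap between "common" and "what matters" in miniature.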

Though chess and speech recognition are considered part of AI, Optical Character Recognition (OCR) often no longer is, because it has become a routine technology.

What is Artificial General Intelligence (AGI)?

"Logical reasoning is slow and our brains spend only about 0.001% of its cycles on this mode of thinking. In contrast, Intuitive Understanding is fast and is used for everything from sequencing our leg muscles as we are walking across the floor to language generation and understanding to enjoying a symphony. AI research to date has been focusing on this 0.001% of the brain's operations, neglecting the 99.999% that the brain spends on the Intuitive Understanding part."

This is where AGI is headed: creating a truly intelligent agent that can understand as we humans do, without being given any models of the domain, and that creates its own models instead.

AI research uses Models of Reality and is therefore just Programming. The Model of Reality can never be complete, and therefore the AI is limited to operating in the problem domains defined by the Model. It is therefore not a General intelligence. These are also called Reductionist AI Systems, since Reductionism is exactly the use of Models. The most famous example of a Reductionist AI system is CYC (Ideas for the future), but most modern natural language processing is also Reductionist, since it uses grammars, word lists, dictionaries, and other Models of Language.
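To make the point about word lists concrete, here is a minimal sketch of a language system whose entire "Model of Language" is two hand-written word lists. The lists and the `score_sentiment` function are hypothetical and exist only for illustration; they are not taken from CYC or any real NLP library.

```python
# Hypothetical, hand-written Model of Language: two tiny word lists.
POSITIVE_WORDS = {"good", "great", "excellent"}
NEGATIVE_WORDS = {"bad", "poor", "terrible"}


def score_sentiment(sentence: str) -> int:
    """Count positive words minus negative words; unknown words count as zero."""
    words = [word.strip(".,!?") for word in sentence.lower().split()]
    return sum((w in POSITIVE_WORDS) - (w in NEGATIVE_WORDS) for w in words)


print(score_sentiment("The film was great"))          # word is in the model
print(score_sentiment("The film was a masterpiece"))  # word is not in the model
```

Any word the programmer did not anticipate simply contributes nothing, so the system is confined to the domain its supplied model happens to cover.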

On the other hand, AGI research strives towards General intelligence. AGI systems therefore cannot use Models; they must be created using Model Free Methods, in which programmers supply NO models of any problem domains. AGI systems will create all their Models themselves, as needed and as encountered. They have mechanisms to determine "What matters" in any problem domain without necessarily knowing anything about the domain beforehand. This is known as "Domain Independent estimate of Saliency" and is one of the most important research topics in the field. Model Free Methods are used to create a learning substrate, and that is the extent of the programming required for them; they learn everything they know from their input senses. Model Free AGIs are typically small: these substrates only require about 10,000 lines of code, but the machines require a lot of memory during their training phase.

AI is mostly "Symbolic" and AGI is always "Subsymbolic". AI uses logic whereas AGI must use Intuition. Intuition based AGIs can also be called "Understanding Machines".

If anyone wants to move into AGI as a career, they owe it to themselves to fully understand the difference between Model Based AI and Model Free AGI, since it allows them to skip more than 90% of all AI books ever written, which generally deal with Model Based AI.

Conclusion.

So, to achieve what AI is trying to achieve, we need a better understanding of intelligence, learning, knowledge, reasoning, understanding, intuition, instinct, saliency, novelty and, the biggest one, Reduction.

Such an understanding can seriously narrow down many important design criteria for a true AI, and can point to methodologies for testing the strength of our AIs at low “syntactic” levels as well as higher, “more semantic” levels. So we need to learn this AI Epistemology.

Note: Some of the content is taken as reference from various other sources.
